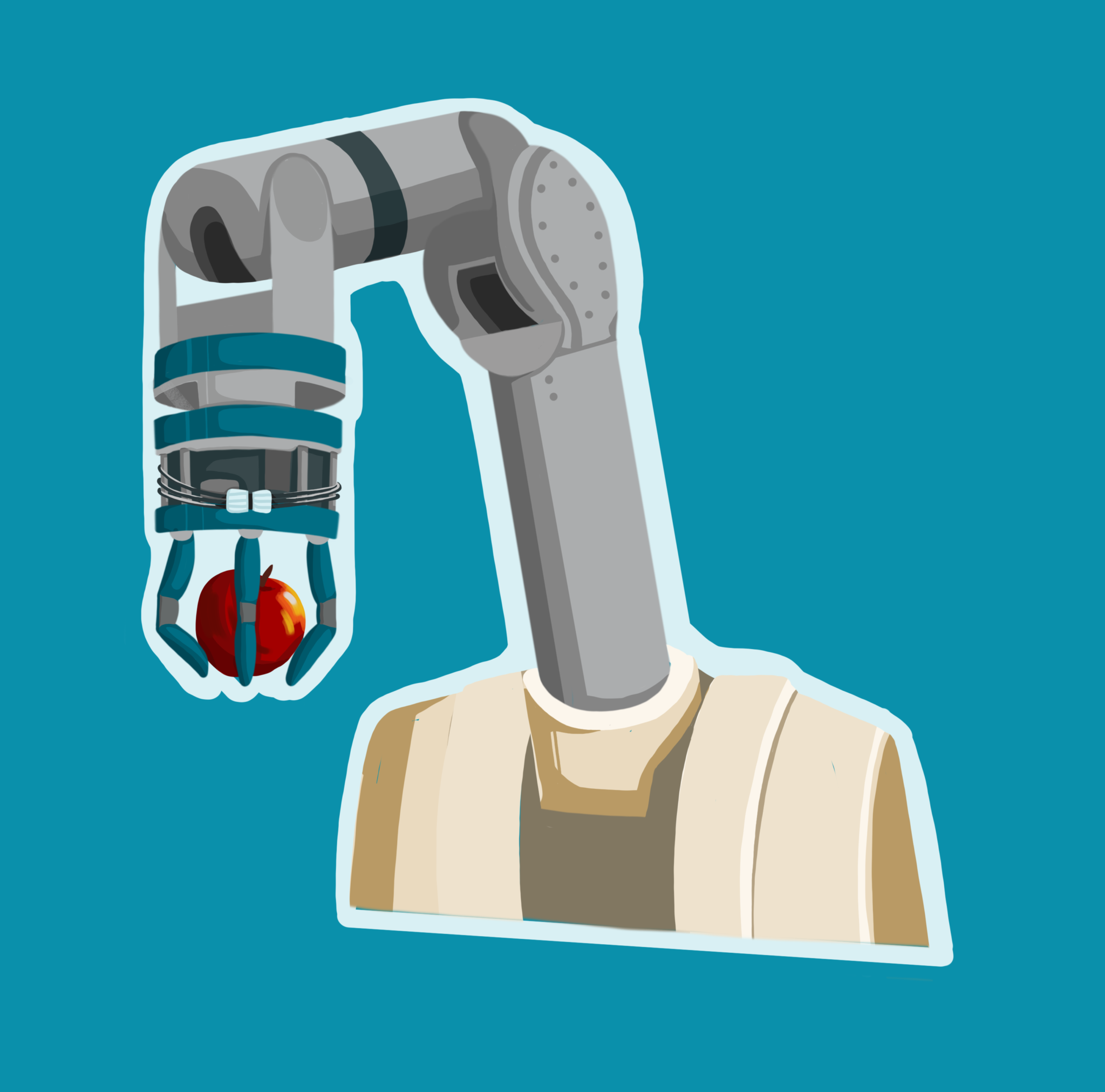

Freed to fidget, robotic hands manipulate everyday objects

In newly published research, Yale researchers teach robotic hands to fidget, improving both their adaptability to different objects and their control of movement.

Yale Daily News

Robotic hands are traditionally designed to execute precisely precalculated steps. When the Yale GRAB Lab programs them to continuously alter their grip instead, this fidgeting helps them adapt to the variety of objects and movements required to navigate the real world.

Forty-five percent of the human motor cortex is dedicated to hand manipulation. Designing a robot hand that mimics human dexterity is a challenging task, but the GRAB Lab at Yale, led by professor Aaron Dollar, has made a significant breakthrough. By employing soft hands, which conform to their environment without being sent a motor command, the robot can adapt to minuscule errors in real time. This allows the hands to manipulate many types of objects, unlike most published hand designs, which are trained for a single specific task. The hand is also multimodal, meaning it combines multiple types of movement, such as rotation and translation. The design was published in IEEE Robotics and Automation Letters.

“Our system works on several different objects, such as toy bunnies and toy cars, which have different surfaces, topologies, and textures,” said Bowen Wen, a computer science PhD student at Rutgers and a collaborator of the GRAB Lab. “This means our system is robust to different scenarios.”

Robotic hands generally manipulate objects through fixed points of contact, according to Andrew Morgan, a PhD student in the GRAB Lab at Yale and first author on the paper. Humans, however, fidget: they adjust their grip, continuously detaching and reattaching their fingers to complete a movement. Changing a hand's points of contact with an object in this way is known as finger gaiting. The paper outlines novel algorithms that determine when to change those points of contact, resulting in robots that better mimic human movement patterns.

Adaptability is crucial to the human hand. Morgan offered the thought experiment of a fingertip resting on a wooden table. Because of the friction between fingertip and wood, the finger does not slide. If a sheet of paper were placed between the fingertip and the wood, however, the finger could suddenly slide a long way. Effects like this are very difficult for a computer to predict. Given how unstructured the real world is, robotic hands cannot rely on sensors alone to function.

“The real world is always going to act differently than a simulation,” said Morgan. “Things change according to lots of conditions, such as humidity in the air. Building better hardware that is able to take up the slack is incredibly useful to robotics.”

The softness of the underactuated hands used in this work enabled the development of complex manipulation algorithms that require knowledge of only a few system parameters. This allows the approach to generalize to a wide range of in-hand manipulation tasks. The robot hand successfully picked up a beach ball, a toy car, a toy bunny and a plastic duck and rotated each to a goal configuration. The objects differed in coloring, texture, compressibility, and geometry.

In traditional rigid hands, by contrast, a small error can compound until the object is ejected from the grasp. Soft hands are much more forgiving of errors without sacrificing control. Because current technology cannot perfectly replicate the 21 degrees of freedom of the human hand, a successful robotic hand must instead be able to yield to feedback from its environment.

Decoupling the perception and planning components of the software not only promotes adaptability but also reduces training time. While a traditional model must be trained for weeks on supercomputers, this system requires only two days of computing time on a personal computer.

“I believe compliance, or also called softness, of robot manipulators will bring a lot of new possibilities for robot manipulation that are otherwise impossible,” said Kaiyu Hang, an assistant professor of computer science at Rice University and a former postdoctoral researcher in the GRAB Lab. “If we look at how humans manipulate objects, we do not always try to manipulate everything very precisely at every step. Instead, we almost always use many imprecise actions and fix the small errors along the way to finally achieve manipulation goals.”

The Yale GRAB Lab created a video showing its robotic hand in action.

The paper outlining the novel finger-gaiting algorithm was authored by Andrew S. Morgan, Kaiyu Hang, Bowen Wen, Kostas Bekris and Aaron M. Dollar.