A Chobani in one hand and a spoon in my mouth, I open my laptop and go to Coursera.org. I click on “Sequence Models” in my course catalog. Though I have been awake for less than 10 minutes, I feel a wave of adrenalin break in my stomach. Today, I will finish the 5-course Deep Learning Specialization on Coursera, the world’s largest massive-open-online-course platform. I put my earphones on: “In this week, you hear about sequence-to-sequence models, which are useful for everything from machine translation to speech recognition.”

An hour and a half later, Andrew Ng, in the same blue shirt that he wore in over one hundred videos of the course, says goodbye with these final words: “Now that you have this superpower, I hope you will use it to go out there and make life better for yourself but also for other people.”

I close my Chrome browser and look out my window at the intersection of Crown and Church streets: “Get Carter” buses heave their way through the rain, Gateway Community College kids in parkas huddle outside Rite Aid, people go about their lives on a gray Monday morning. I miss him already, his Singaporean accent, his asymmetric eyes, the way he says “Don’t worry about it if you don’t understand this, we’ll come back to it later.” Thinking about it, I realize that other than with my wife, I spent most of the past week with Andrew Ng, or the virtual version of him. The silence of the apartment is like the stillness that arrives after finishing a Netflix series, hollow and slightly incapacitating. But I have learned something useful this time, something that will last. I am now a data scientist.

After my third year at medical school, I decided to take an extra year to do research instead of going straight into my final year. There were many reasons for this, including my exhaustion from rotating at the hospital and my desire to bring artificial intelligence to the field of medicine. It was jarring that, while the rest of the world was rolling along with Amazon Alexas, SpaceX drones and Google Translate, I was comparing a patient’s medical chart with a handwritten list of medications, inputting values with my finger on an online calculator to estimate a patient’s 10-year risk of a stroke, and learning archaic physical exam maneuvers as if I were an apprentice to some dark magic. I felt like Tony Stark transported back to the Stone Age. Where was my Jarvis, my R8, my Iron Man suit?

I remember my first rendezvous with AI. In September 2013, during my senior fall at Stanford University, I sat in NVIDIA Auditorium at Huang Engineering Center for the first lecture of CS229: Machine Learning. The 300-seat auditorium was hopelessly small for the number of people who showed up. With 760 students, this was the largest course at Stanford. In fact, CS229 would later become the most popular course on Coursera, which Andrew Ng had founded the previous year with his Stanford colleague Daphne Koller. Professor Ng, the real version of him this time, began the class with a slide that showed the departments represented by the students enrolled in the class. From the tiny font that filled the screen, I was assured that I, a biology major, was not the only stranger to this city. Many had flocked to the new El Dorado.

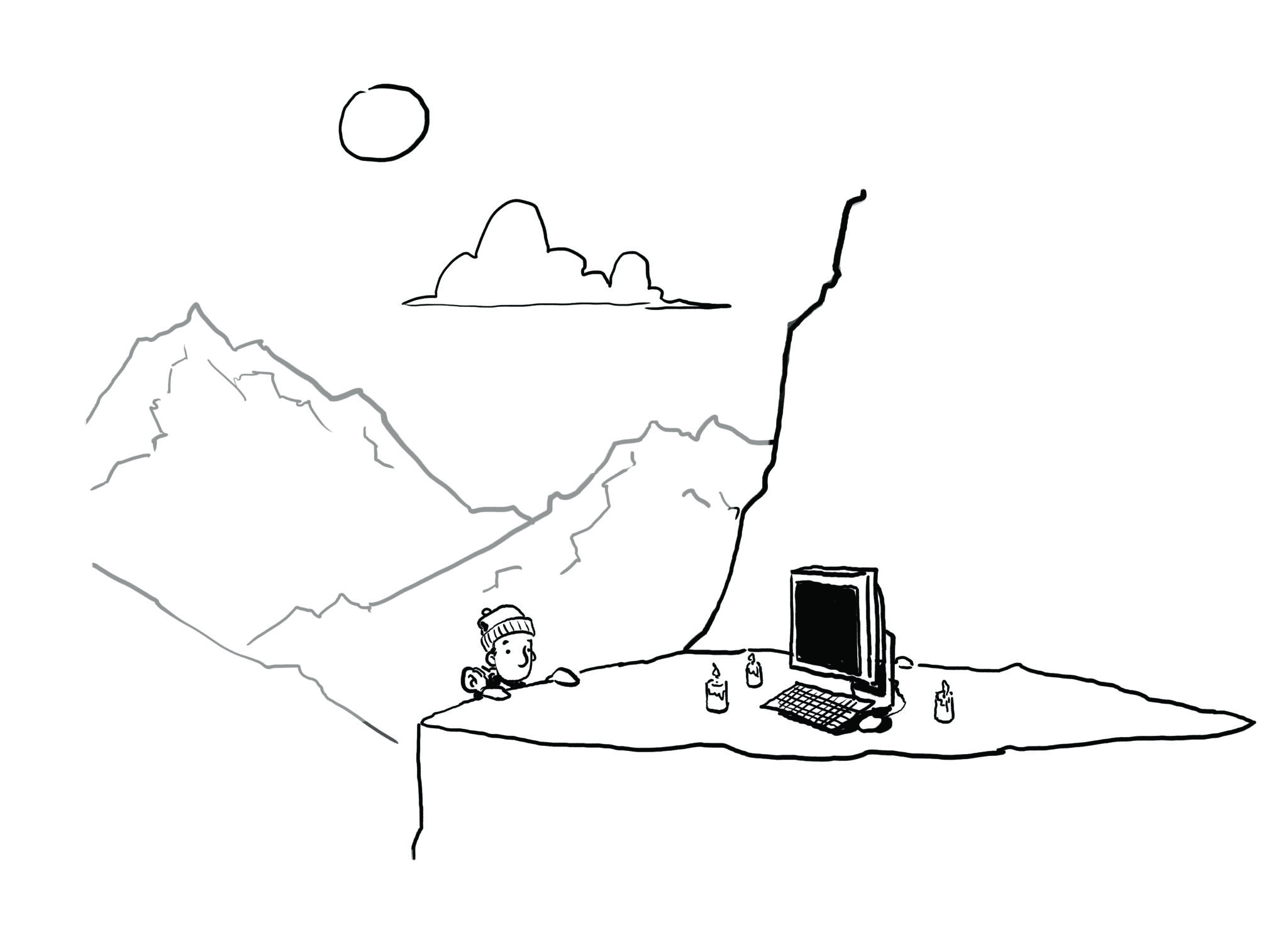

_______

Four years later, I stowed away my white coat and prepared to be reborn as an AI guru. As part of my research, I had access to enough medical record data to spawn a health care startup. I started by learning Python, the most popular programming language in artificial intelligence, then switched over to R. I blazed through multiple specializations on Coursera. What I did not find on Coursera, I found on YouTube, and when YouTube failed me, I had Google Search, which usually took me to Stack Overflow. If all else failed, I cooled myself down with a Chobani and went to office hours at the Yale Center for Research Computing.

Slowly, I made progress. I learned how to use version control with git, became fluent in regular expressions, submitted batch jobs on high-performance clusters, ran TensorFlow on graphics processing units, and once, when I was in bed about to slip into a dream, saw bits of code flashing in the darkness of the room. After more than 100 hours on Coursera and double that many hours on YouTube, thousands of Chrome tabs and a research paper submitted for peer review, I think I can hold a charming conversation at a cocktail party in the Valley.

I was not alone in my makeover: Yale was also rebranding itself. In 2015, the Department of Computer Science was expanded and incorporated into the School of Engineering and Applied Science. In 2017, the Department of Statistics was renamed the Department of Statistics and Data Science. In January of this year, the Center for Biomedical Data Science was established at the School of Medicine. The two classes I audited this year, S&DS365 “Data Mining and Machine Learning” and CPSC477 “Natural Language Processing,” were both taught by new faculty recruited from other institutions. According to a Medium article, the enrollment in core computer science classes at Yale has tripled in the last five years. Seeing kids in CS50 T-shirts walk past me on Prospect Street, I find myself wondering if I am crossing Main Quad at Stanford. The blast of cold wind brings me back to my senses.

_________

Sometime during all this, in August 2017, Andrew Ng announced “deeplearning.ai,” which in his words is “a project dedicated to disseminating AI knowledge.” The project was essentially a new 5-course specialization on Coursera for deep learning. I knew that deep learning had been widely used in commercial applications such as Facebook’s face recognition system as well as in cutting-edge research that sought to elevate AI from its secretarial role. For example, DeepMind’s AlphaGo had used deep learning to beat the best human players in a game with a search space greater than the number of atoms in the universe.

The phrase “deep learning” brings my mind to the ocean, not the warm light-shimmering shallows of Miami Beach, but the cold impenetrable trenches of the Pacific Ocean deep enough to sink the Empire State Building. In his welcome video on Coursera, Ng, in his soft, unassuming voice, beckoned me to join him in “an opportunity to build an amazing world, amazing society, that is AI powered.” I dipped in my toes, then pushed myself off.

I resurfaced months later, still alive. I learned what gradient descent, forward-prop, back-prop, dropout, convnets, resnets, GRUs and LSTMs mean, and how to implement them using APIs like Keras. Deep learning was simpler than I had thought: It was a bunch of generalized linear models stacked on top of each other, plus a variety of nonlinear transformations. In fact, multilayered neural networks, which have now been rebranded as deep learning, had been around since the 1980s.

Regardless, there is a kind of mesmerizing beauty to deep learning. Imagining the gradients shuffling back and forth across millions of parameters in a large network is like watching an army of ants under a rock you accidentally unearthed. One ant can accomplish nothing by itself, but a million ants can self-allocate tasks, regulate foraging and find optimized paths to food sources with a kind of collective intelligence. Such phenomena illustrate the concept of emergence, where complexity arises from simple, even binary rules. The name “neural network” comes from the intuition that this is how our brains might learn to map sensory input into knowledge. Wire up one billion neurons, you get a monkey. Wire up 10 billion, you get Homo sapiens. With enough quantity, you get quality.

Plus, the thing works. It outperforms all existing algorithms on homogeneous data types like image and audio. In the field of natural language processing, deep learning using vector representations of words replaced decades of hand-built algorithms based on linguistic theory. Even the medical profession, which has traditionally been resistant to change, is beginning to accept the role of AI in diagnosis and treatment, with research using deep learning increasingly visible in top-tier journals.

___________

“I am a data scientist,” I repeat the words to myself. Despite all I have learned, I feel strangely empty. There is no fanfare, no banner that says “Welcome to Life 2.0, Powered with State-of-the-art Deep Learning.” Maybe it is the weather, I tell myself and close my laptop. It feels like I have returned from a package tour to Europe and have come home more sleep-deprived, like I have missed some grand lesson.

Like traveling, education is often the most rewarding when it offers a new orientation to the world, or “a momentary stay against confusion,” as Robert Frost once defined poetry. My past eight months were devoid of such stays. In retrospect, the whole affair was like a brouhaha around a pile of clothes on Black Friday. Had I expected too much from an online course with a virtual professor and auto-graded assignments? Yet, the highfalutin terms thrown around in TED Talks and TechCrunch articles seemed to promise something greater.

My letdown may have been due not only to the mode of study, but also to something inherent to the material. Many have criticized the unbridled optimism that surrounds deep learning, or even the entirety of data science. Statistician David Donoho observes in his essay “50 Years of Data Science” that there is no consensus on what data science actually is. One could perhaps describe data science as a rushed merger between statistics and computer science with a brutal emphasis on practicality. The field’s use of the word “skills” rather than “knowledge” reflects its commercial nature. Capitalizing on the demand of the job market, many universities, including Yale, have established new centers and have started to offer degree programs. Data science is now the gold rush in academia.

And I realize as I write: I felt empty because my passion for AI was not genuine but was rooted in the fear of falling behind, because deep in my heart, while I invoked the inefficiency of the health care system and the potential benefits to patient care, I was driven as much by the thirst for power and a childish sense of superiority.

________

Regardless of how I feel, AI is changing my daily life. After I finish writing this article, I will take a long-overdue shower with my iPhone on YouTube autoplay, which sifts through playlists of millions of users to find songs that I might like. Because I don’t pay $10 a month for the ad-free version, I will have to suffer one or two targeted ads that were chosen based on my search history. I also need to go to Costco for groceries, and I will be using Google Maps though I know how to get there, just in case there is an unexpected slowdown.

Google Maps is a good analogy for the world I live in: mapped down to details, no area unexplored, every restaurant, hotel and state park rated something out of 5. I have gotten used to taking the shortest path between any two points, to dining at the highest Yelp-reviewed restaurants. Optimization appeals to us naturally, because we ourselves are products of an optimization algorithm called natural selection. As AI gains access to data from other facets of our lives, more decisions that we used to mull over will be outsourced to predictive models. One can imagine a life where a softmax classifier built on vectorized representations of personalities decides one’s optimal school, optimal major, optimal spouse, optimal dog.

Can life be optimized, and if so, should our lives be optimized? Having explored the world of AI, I return to this question again and again. It makes sense to let AI optimize cancer treatments and aircraft flight paths, but what about the paths of our lives? I am confident that, if given the cumulative record of human behavior in numeric form, a recurrent neural network could propose a way of life to optimize quantifiable metrics like net worth, lifespan or the number of felonies. Our lives on autopilot, we will make fewer emotion-induced errors, be sheltered from unexpected disaster. All our ugliness will converge toward the perfectly symmetric face. But I believe that such a beauty will be without meaning, will not capture the fractal texture of human history woven by the dream-tormented, love-sick, shit-covered, sea-wandering vessels that are our bodies, will not contain the invaluable lessons learned from the sequence of errors that is life.

In “Cloud Atlas,” an engineered clone, asked if she is happy, replies: “If, by happiness, you mean the absence of adversity, I and all fabricants are the happiest stratum in corpocracy, as genomicists insist. However, if happiness means the conquest of adversity, or a sense of purpose, or the exercise of one’s will to power, then of all Nea So Copros’s slaves we surely are the most miserable.” Although I am not religious, I have faith that such things as happiness cannot be modeled by a loss function. Neuroscientists’ search for a quantifiable measure of happiness may only validate the rationale behind “soma,” the government-issued happiness pill in “Brave New World,” a phenomenon mirrored to a frightening degree by the current opioid crisis in America.

Yes, deep learning will change the world. As AI enters the field of medicine, it will perhaps save many lives. But just as life remains as dark after the invention of the light bulb, AI will not shed light on the most important questions of life. As I look out my window at the gray sky of New Haven, I realize that I, in my late 20s, my career solidifying like putty left out to dry, had sought in AI a kind of rescue, a vision of new life, just as there might have been some who set out for El Dorado in search of a new home. But there are no answers here.

Deep learning won’t change my life. I will continue to climb against the gradient, hand-in-hand with the person I promised to spend the rest of my life with. I will make the same mistakes as all humanity who came before me. I will receive with open arms the unexpected gifts and disasters on my path. Perhaps my life will contain more sadness than joy, but it will be my own. As the Oracle says in “The Matrix,” I did not come here to make a choice. I have already made it. I am here to understand why I made it.

Wyatt Hong | wyatt.hong@yale.edu